Intro

If you’ve played with vLLM locally you already know how fast it can crank out tokens. But the minute you try to serve real traffic with multiple models, thousands of chats, you hit the same pain points the community kept reporting:

| ⚠️ Pain point | What you really want |

|---|---|

| High GPU bill | Smarter routing + cache reuse |

| K8s YAML sprawl | One-command Helm install |

| Slow recovery when a pod dies | Automatic request migration |

| Long tail latencies | KV-cache pre-fetch & compression |

Topic of the Day

💡 vLLM Production Stack tackles all of the above with a community-maintained layer that wraps vanilla vLLM, adds a Python-native router, LMCache-powered KV-cache network, autoscaling hooks and Grafana dashboards—all deployable in a single Helm chart. Let’s dive into it!✍🏻

While authored independently, this series benefited from the LMCache‘s supportive presence & openness to guide.

What is vLLM Production Stack ?

The Production-Stack is an open-source Kubernetes-native, enterprise-ready inference setup of vLLM, maintained by LMCache that lets you scale from a single node to a multi-cluster deployment without changing application code. Here’s a quick look at its Key Components:

- Serving Engine: The vLLM core component that runs different LLMs

- Request Router: Directs requests to the appropriate backends based on routing keys or session IDs

- Observability Stack: Monitors metrics through Prometheus and Grafana (i.e time to first token TTFT, latency)

- Persistent Storage: Stores model weights and other required data

- KV Cache Offloading: offloads cache from GPU to CPU or disk, enabling larger context and higher cache hit rates.

Production-stack benefits

| Pillar | Capabilities |

|---|---|

| User-Friendly |

|

| Better Performance |

|

| Production-Ready |

|

Why another “stack”?

Unlike the KServe, kubeai, aibrix that run vanilla vLLM — Production stack is the only official implementation, that is a production-tuned, featuring LMCache-powered KV cache offloading, which is one of its key performance features.

Request Flow

The diagram below walks through the entire request lifecycle through the vLLM Production Stack: the router accepts the call, discovers and selects the best engine, forwards it for inference, and streams the response back to the client.

Production-stack Architecture

The architecture follows cloud-native principles and leverages K8s for orchestration, scaling, and service discovery.

Under the Hood are three-main layers:

- Core Layer: Everything you need to serve traffic such as the Router + vLLM engines with KV-cache and LoRA .

- Integration Layer: K8s/Ray adapters slot the core into any orchestrator.✅ No refactoring/migration needed.

- Infrastructure Layer: Runs GPU/CPU pools on K8s, Ray, or bare-metal. ✅Fully platform-agnostic.

I. Serving Engine

The serving engine is the core component that runs the vLLM instances that processes inference requests.

Each serving engine deployment:

- Runs in a vLLM container, and mounts persistent volume (

/data) - Loads a model from a Hugging Face or local storage

- Exposes Endpoints:

- API endpoint (

port 8000) - Endpoint for monitoring

/health

- API endpoint (

- Includes multiple configurations such as:

| Component | Configuration |

|---|---|

| Model Execution |

|

| LMCache Integration (Optional) | KV cache offloading (if enabled):

|

II. The Router

The router is responsible for directing requests to the appropriate serving engine based on configured routing logic that:

- Discovers engines via K8s API or static backend list

- Routes requests (round-robin or session-sticky)

- Exports performance metrics

- Exposes an OpenAI-compatible proxy endpoint

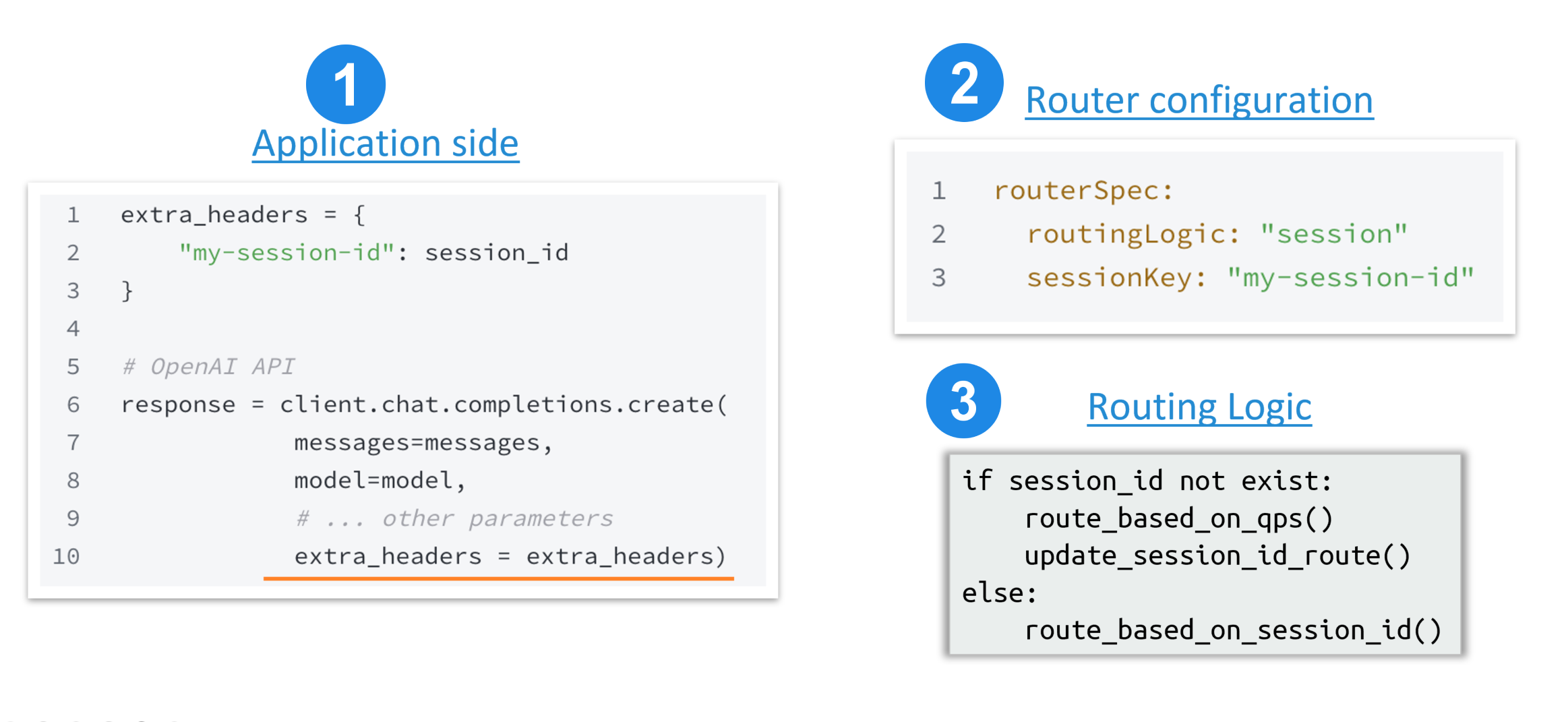

1. Routing logic

The router’s Smart routing implements two logics:

- Model routing filters the model from the available backends based on the requested model.

- Instance routing uses routing algorithms and live metrics to pick the best vLLM instance (replica) for each request.

2. Instance routing algorithms

The main Algorithms are Round robin, Session stickiness (session bound), and Prefix-aware load-balancing (WIP).

A. Instance Affinity – Session Stickiness

Session stickiness hashes session IDs to keep traffic on the same backend; if there’s no ID, it falls back to QPS routing

B. Instance Affinity – Prefix-aware

This feature is still in progress (see PR239) and relies on a chunk-based hash-trie built from each request body.

The Workflow operates as follows:

1️⃣. Chunk the request

2️⃣. Hash each chunk

3️⃣. Find the longest prefix match in the trie

4️⃣. Update the trie with the new hashes.

3. Fault tolerance (request migration)

When a vLLM instance dies, request migration shifts existing session to a new instance with its KV cache intact.

🧰 Other advanced routing features

✍🏻Request-rewrite module:

🔹 Adds Personal Identifiable Information (PII) protection before the request hits the model.

🔎Routing extensions:

🔹 Semantic caching caches responses based on semantic similarity of requests .

🔹 KV cache aware routing for smarter instance picks based on cached tokenized prompt.

III. KV-Cache Network

As explained in our previous post KV cache speeds-up inference, whether the cache is served locally or remotely.

LMCache’s KV-cache network exposes a unified sharing interface and serves as the backend for all cache optimizations, so every instance can reuse the same cache while Speeding up KV-cache processing.

1. KV Cache Delivery- Pooling Mode

In pooling mode, data is stored and retrieved remotely in stores like redis DB through LMCache interface.

2. KV Cache Delivery- Peer-to-Peer Mode

Here, data is stored locally and shared between instances, while redis acts as a lookup server only storing metadata.

Other LMCache advanced optimizations

1️⃣ KV cache blending

When chats have different input documents. Cacheblend concatenate their kv caches into one (useful in RAG setups).

2️⃣KV cache compression

Uses CacheGen algorithm to compress a KV cache into more compact bitstream representations for a faster transfer.

3️⃣ KV cache translation

Translation allows kv cache sharing between different LLMs (i.e Llama3 🔀 Mixtral), which is ideal for AI agents.

4️⃣ Update KV cache

LMCache can even offline-update KV entries, so the next time the cache is reused the LLM returns smarter answers.

5️⃣. KV Cache Prefetching

Prefetching lets the router pull remote caches to engines before request execution, keeping GPU busy instead of idle.

IV. The observability stack🔎

The observability stack provides monitoring capabilities for the vLLM Production Stack through Prometheus & Grafana.

All configuration details can be found here where the stack

- Collects metrics from the router and serving engines

- Provides custom metrics through the Prometheus Adapter

- Monitors and feeds the Grafana dashboard with real-time key model performance indicators including:

| vLLM Grafana Metric | Description |

|---|---|

| Available vLLM Instances | Displays the number of healthy instances. |

| Request Latency Distribution | Visualizes end-to-end request latency. |

| Time-to-First-Token (TTFT) Distribution | Monitors response times for token generation. |

| Number of Running Requests | Tracks the number of active requests per instance. |

| Number of Pending Requests | Tracks requests waiting to be processed. |

| GPU KV Usage Percent | Monitors GPU KV cache usage. |

| GPU KV Cache Hit Rate | Displays the hit rate for the GPU KV cache. |

V. One-click helm install

To top it off, vLLM Production-Stack ships as a one-command Helm chart, turning a slim values.yaml into a fully wired K8s deployment; pods, services, autoscalers, and dashboards included.

To get started with the vLLM Production Stack, you’ll need:

- A running Kubernetes cluster with GPU support

- Helm installed on your machine

- kubectl installed and configured to access your cluster

helm install … gives you router, vLLM, LMCache, autoscaler, ServiceMonitor objects and dashboards out of the box.

$ git clone https://github.com/vllm-project/production-stack.git

$ cd production-stack/

1️⃣ # Add chart repo

helm repo add vllm https://vllm-project.github.io/production-stack

2️⃣ # Spin up a minimal stack (values file ships with the repo)

helm install vllm vllm/vllm-stack -f tutorials/assets/values-01-minimal-example.yaml- Expose the router locally

# local port forwarding

$ kubectl port-forward svc/vllm-router-service 30080:80

$ curl http://localhost:30080/models | jq .data[].id

"TinyLlama/TinyLlama-1.1B-Chat-v1.0"Benchmarks— Show me the numbers

A public benchmark with 80 concurrent users chatting to a Llama-2-8B replica (2× A100 80GB) shows that Production Stack yields lower latency and Higher Speed.

TL;DR

If you need vLLM performance and SRE-grade reliability, the production stack saves you weeks of YAML tweaking.

- Drop it into any GPU-enabled K8s cluster

- Point your OpenAI-compatible client at the router

- and get back to shipping features instead of herding pods.

🚀Coming Up Next

Now that we’ve seen how the vLLM Production-Stack turns vanilla model serving into an enterprise-grade platform.

In the next blog post, we’ll dive into the Helm chart configuration—walking through the key settings in values.yaml and explore deployment recipes ranging from a minimal setup to multi-cloud roll-outs (EKS,AKS,GKE).

Stay tuned for Part 2🫶🏻

Reference

Run AI Your Way — In Your Cloud

Want full control over your AI backend? The CloudThrill VLLM Private Inference POC is still open — but not forever.

📢 Secure your spot (only a few left), 𝗔𝗽𝗽𝗹𝘆 𝗻𝗼𝘄!

Run AI assistants, RAG, or internal models on an AI backend 𝗽𝗿𝗶𝘃𝗮𝘁𝗲𝗹𝘆 𝗶𝗻 𝘆𝗼𝘂𝗿 𝗰𝗹𝗼𝘂𝗱 –

✅ No external APIs

✅ No vendor lock-in

✅ Total data control

𝗬𝗼𝘂𝗿 𝗶𝗻𝗳𝗿𝗮. 𝗬𝗼𝘂𝗿 𝗺𝗼𝗱𝗲𝗹𝘀. 𝗬𝗼𝘂𝗿 𝗿𝘂𝗹𝗲𝘀…

🙋🏻♀️If you like this content please subscribe to our blog newsletter ❤️.